AI Civil Rights Act

A landmark civil rights bill for the digital age.

Our Vision

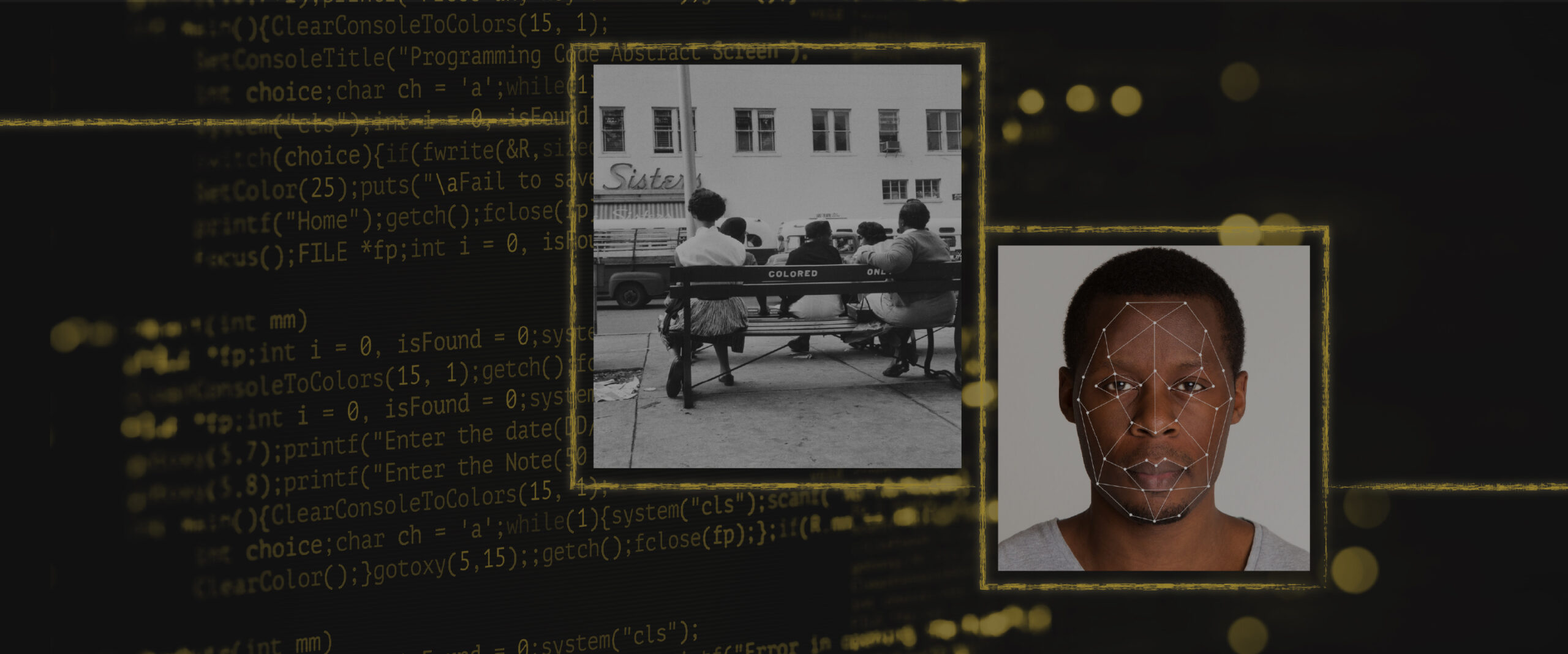

Artificial intelligence is transforming access to opportunity—shaping decisions about housing, lending, healthcare, jobs, education, and even interactions with law enforcement. But without strong protections, AI can deepen existing inequities and automate discrimination against Black communities and other communities of color.

The AI Civil Rights Act, championed by the Lawyers’ Committee for Civil Rights Under Law, ensures that innovation strengthens civil rights rather than undermining them. It creates real transparency, strong safeguards, and accountability so technology serves justice, not bias.

What’s at Stake

AI tools are already delivering life-changing decisions. Many are built on data shaped by generations of discrimination. Without protections, these systems can replicate and magnify inequality:

- Mortgage algorithms have denied Black applicants up to 80% more often than white applicants with similar profiles.

- Hiring algorithms have screened out women and people of color—even when they were qualified.

- Predictive policing software disproportionately targets Black and Latino neighborhoods.

- Insurance and credit scoring algorithms routinely charge communities of color higher rates.

AI isn’t neutral. And without rules, discrimination gets automated.

What the AI Civil Rights Act Does

The Act creates a strong, future-ready civil rights framework that protects people from discriminatory technologies while enabling responsible innovation.

Key Protections Include:

- No AI-driven discrimination in housing, employment, education, healthcare, credit, the criminal legal system, and other key areas of life.

- Independent audits for high-impact AI systems—before and after they’re deployed.

- Transparency about when AI is used and how decisions are made.

- Enforcement power for individuals, states, and federal regulators.

- Civil rights protections that evolve as technology evolves.

Myth vs. Fact: Setting the Record Straight

Myth 1: “AI is neutral.”

Fact: AI reflects the data it’s trained on—and our systems are shaped by a long history of bias and discrimination. Without safeguards, AI can automate discrimination.

Myth 2: “If AI gets something wrong, people can just appeal.”

Fact: Most people never even know an AI system was involved. The Act requires transparency so harmful decisions can be challenged.

Myth 3: “Regulation kills innovation.”

Fact: Civil rights laws didn’t kill housing or job markets—they made them fairer. The Act supports responsible innovation that protects people.

Myth 4: “This only affects tech companies.”

Fact: AI is deciding who gets a mortgage, a job interview, a healthcare approval, or police attention. Everyone is affected, and everyone deserves protection.

Myth 5: “Companies already test for bias.”

Fact: Internal checks aren’t enough. The Act requires independent audits and real accountability.

Myth 6: “This is niche—only for tech experts.”

Fact: This is a civil rights issue. Algorithmic justice is the next frontier of equal protection.

Myth 7: “People can just sue if something goes wrong.”

Fact: You often don’t know you were harmed until it’s too late. The Act focuses on prevention—and still gives people the right to take action if harm occurs.

Press Section

- Watch the Press Conference

- Press Statement: Statement from the Lawyers’ Committee for Civil Rights Under Law on Introduction of the AI Civil Rights Act

- Press Release: Sen. Markey, Rep. Clarke Reintroduce AI Civil Rights Act to Eliminate AI Discrimination and Enact Guardrails on Use of Algorithms in Decisions Impacting People’s Rights, Civil Liberties, Livelihoods

Take Action Today

Your voice can help move this legislation forward. Members of Congress need to hear directly from the people they represent.

Tell Your Senators and Representative to Co-Sponsor the AI Civil Rights Act.

Call Congress

You can call your lawmakers directly and share your message in minutes.

📞 U.S. Capitol Switchboard: 202-224-3121

Ask to be connected to:

- Your U.S. Representative, and

- Your two U.S. Senators